We believe that technology only becomes truly valuable when it contributes to people and society. That’s why we always look for ways to make existing systems smarter without collecting more data than necessary. Our project for 99gram.nl is a great example of that.

From forum data to valuable insights

The 99gram forum has been around for nearly 15 years. Beneath the surface lies a wealth of information: thousands of posts and replies carefully moderated over time. What makes this data special is that it already comes with human labels. A moderator has assessed whether each message was safe, inappropriate, or spam.

That makes this dataset ideal for use in a machine learning model. Not to collect more data, but to make better use of the reliable data that already exists.

A custom model instead of sharing data

Of course, we could outsource this task to a Large Language Model (LLM) like OpenAI. But that brings two main issues:

- Privacy: you might end up sharing sensitive data with an external party (outside the EU).

- High cost: there are recurring expenses involved.

That’s why we chose a different approach: training our own classification model, specifically for 99gram. This way, all data stays local, in a secure and controlled environment, fully in line with our ISO 27001 standards for information security.

Requirements for responsible training

Before we started, we defined strict requirements for both the dataset and the process:

- The data had to be clean: deleted or sensitive messages were filtered out.

- No personal information was allowed in the training data.

- The quality had to be high: 99gram’s moderation history made that possible.

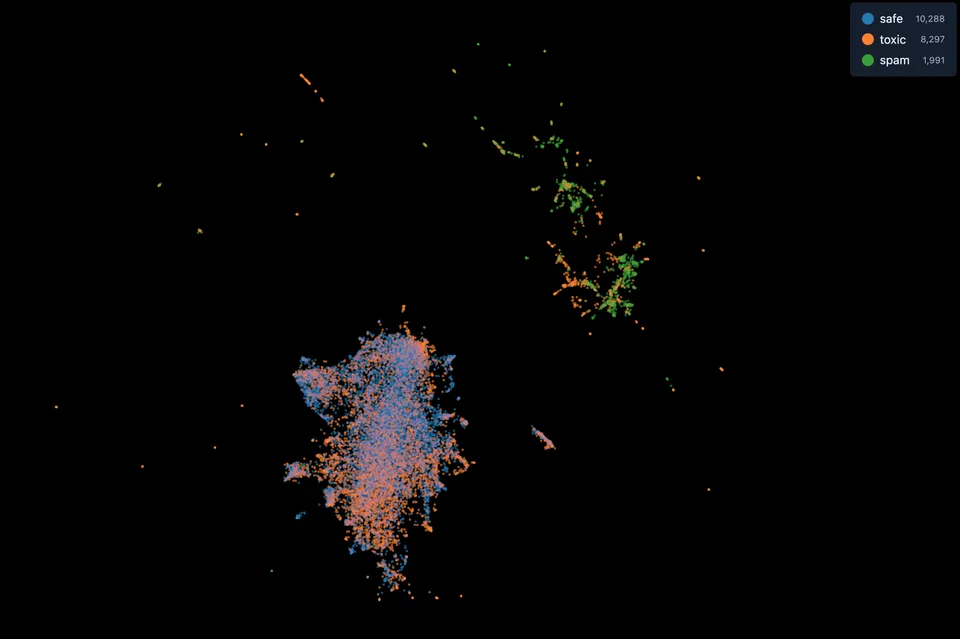

This resulted in a naturally labeled training set with reliable categories:

- Safe: messages that are publicly visible online

- Toxic: messages that were removed by moderators

- Spam: messages that were blocked by automatic filters

From raw data to a trained model

Using this dataset, we built the model step by step. First, we collected all relevant topics and responses from the database. Then we cleaned the data and split it into two sets:

- A training set to teach the model how to recognize what’s safe or harmful

- An evaluation set to test its performance and make adjustments

For training, we used an embedding model, the same technology we previously used successfully at AcademicTransfer. Thanks to rapid advances in this field, we can now run powerful AI models without massive infrastructure, right within our own environment.

Inspiration

This prototype immediately sparked new ideas:

- Real-time moderation: new posts and replies are automatically reviewed before going live, helping keep the forum safe without losing human oversight

- Support for counselors: the model can classify messages and flag signs of concern or risk, allowing support staff to respond more quickly

- Training for counselors: the model can simulate realistic chat conversations based on actual forum posts

What this shows

This project demonstrates how AI and Machine Learning can be applied responsibly in business-critical applications and public platforms. By making smart use of existing data, we create systems that are safer, more sustainable, and cost-effective, without relying on external AI services.

At Leukeleu, we believe this is the future of software development: technology that doesn’t demand more data, but does more with reliable data.

Want to know more or have any questions?

Keep informed!

More articles

- Seven lessons from my renovation that also apply to software development

- Impact Mapping: Turning software into real business value

- Code with a soul

- How can we become less digitally indifferent?

- Responsible use of AI

- Experimenting with Vibe Coding: a new way of working

- django-hidp: A Complete Authentication System for Django Developers

- How Leukeleu's contribution to open source strengthens your digital security and privacy

- Digital sovereignty

- ISO 27001 certification replaces hope with certainty